Ethical lines are often blurred when it comes to embracing ubiquitous machines.

Robots can help farmers optimise agricultural production and promote sustainable farming practices. Photo by ThisisEngineering RAEng on Unsplash (Unsplash License)

Robots will shape the cities of the future, from educating children to cleaning streets to protecting borders from military threats, and much more. While ubiquitous robots aren’t arriving tomorrow, they are closer than many realise: by 2030, the number of humanoid robots (such as personal assistant bots) is slated to exceed 244 million worldwide, up from 220 million in 2020.

Examples of cities with existing or planned robotic infrastructure include Masdar City’s Personal Rapid Transit (PRT) in the United Arab Emirates; The Line, a planned city in Neom, Saudi Arabia; South Korea’s Songdo waste management system; collaborative robots (cobots) in Denmark’s Odense; and traffic-navigating robots in Japan’s Takeshiba district.

But the rise of robotics poses thorny ethical questions about how we govern entities that sit between the conscience of humanity and the mechanical nature of machines like a dishwasher or a lawnmower. Getting on the front foot with governance could make a huge difference by the end of the decade.

Robots can be designed to mimic humans (like humanoids or androids) and used in practically every sector: healthcare, manufacturing, logistics, space exploration, military, entertainment, hospitality and even in the home.

Robots are designed to compensate for human limitations: they are precise across repetitive tasks, durable, and unswayed by emotion. They are not designed to topple the executive and seize power, contrary to what films like The Terminator might posit.

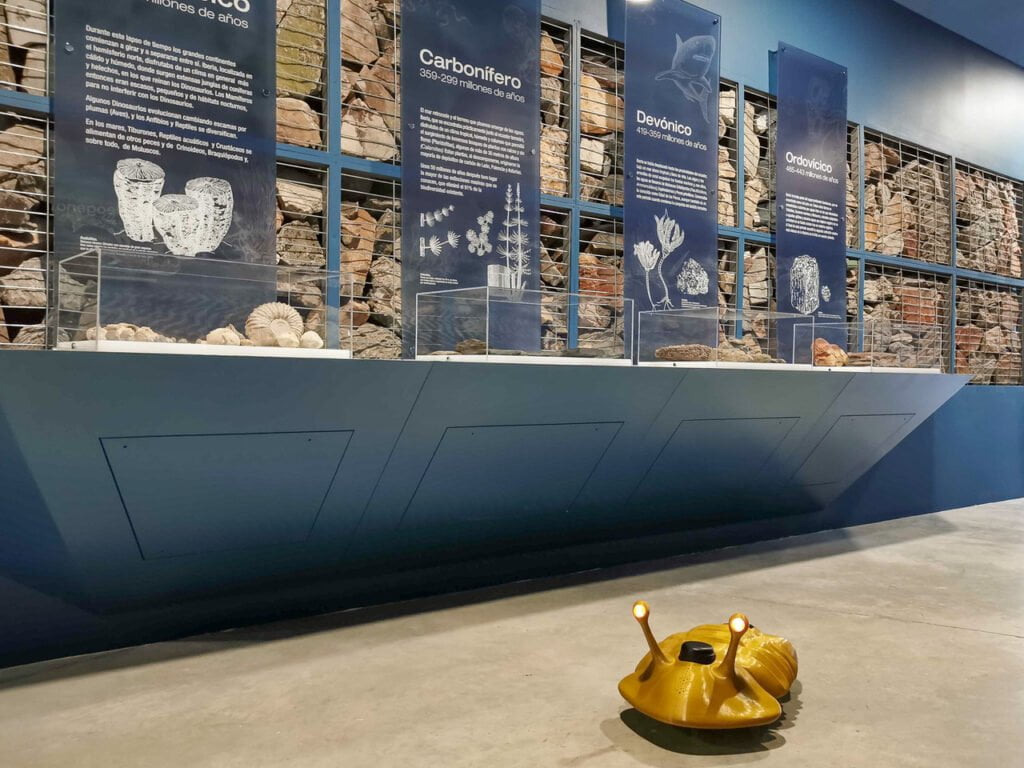

In dangerous jobs or tasks that require intensive manual labour, robots can complement or substitute for the human workforce. In agriculture, drones have huge potential to assist with farming activities.

In early education, robots are accompanying children to learn and play. ‘Little Sophia’, a ‘robot friend’, aims to inspire children to learn about coding, AI, science, technology, engineering and maths through a safe, interactive, human-robot experience.

The rising trend of ubiquitous humanoid robots living together with humans has raised the issue of responsible tech and robot ethics.

Debates on ethical robotics that began in the early 2000s still centre on the same key issues: privacy and security, opacity versus transparency, and algorithmic bias. To address these issues, researchers have proposed five ethical principles, together with seven high-level messages for responsible robotics. The principles include:

- Robots should not be designed as weapons, except for national security reasons.

- Robots should be designed and operated to comply with existing laws, including privacy and security.

- Robots are products: as with other products, they should be designed to be safe and secure.

- Robots are manufactured artefacts: the illusion of emotions and intent should not be used to exploit vulnerable users.

- It should be possible to find out who is responsible for any robot.

Researchers also suggest that designers of robot cities rethink how principles like these can be respected during the design process, for example by providing off-switches: effective control systems, such as actuator mechanisms and algorithms, that can automatically switch robots off.

Without agreed principles, robots could pose a real threat to humans. These threats include cyber threats such as ransomware and DDoS attacks, physical threats from increasingly autonomous devices and robots, and emotional threats from becoming over-attached to robots at the expense of real human relationships, as depicted in the 2013 film ‘Her’. Robotics can also harm the environment through excessive energy consumption, accelerated resource depletion and uncontrolled electronic waste.

Cities and lawmakers will also face the emerging threat of Artificial Intelligence (AI) terrorism. From the spread of autonomous drones and robotic swarms to remote attacks or disease delivery via nanorobots, law enforcement and defence organisations face a new frontier of potential threats. To prepare, policy-focused research into robotics, AI law and ethics is advised.

Robots should make life better. In the face of rapid innovation, banning or stifling development is not a feasible response. The onus then falls on governments to cultivate more robot-aware citizens and responsible (licensed) robot creators. This, coupled with a proactive approach to legislation, offers cities the chance to usher in a new era of robotics with more harmony and haste.

Dr Seng Boon LIM is a smart city researcher and senior lecturer at the College of Built Environment, Perak Branch, Universiti Teknologi MARA (UiTM), Malaysia. He declares no conflict of interest. He tweets at @Sengboon_LIM.

Originally published under Creative Commons by 360info™.